...

Short Description of the Use Case

Introduction to the statistical analysis problem

In this exercise, you will perform a binary classification task using Monte Carlo simulated samples representing the Vector Boson Fusion (VBF) Higgs boson production in the four-lepton final state signal and its main background processes at the Large Hadron Collider (LHC) experiments. Two Machine Learning (ML) algorithms will be implemented: an Artificial Neural Network (ANN) and a Random Forest (RF).

- You will learn how a multivariate analysis algorithm works (see the introduction below) and, more specifically, how a Machine Learning model is implemented;

- you will acquire basic knowledge of *Higgs boson physics* as described by the Standard Model. During the exercise you will be invited to plot some physical quantities in order to understand the underlying Particle Physics problem;

- you will be invited to *change the hyperparameters* of the ANN and the RF to better understand their effect on the models' performance;

- you will see that the choice of the *input variables* is a key step in building a Machine Learning algorithm, since a good choice is needed to achieve the best possible performance;

- moreover, you will be able to change the background datasets and the decay channels of the final-state particles, and see how the ML algorithms' performance changes.

Multivariate Analysis and Machine Learning algorithms: basic concepts

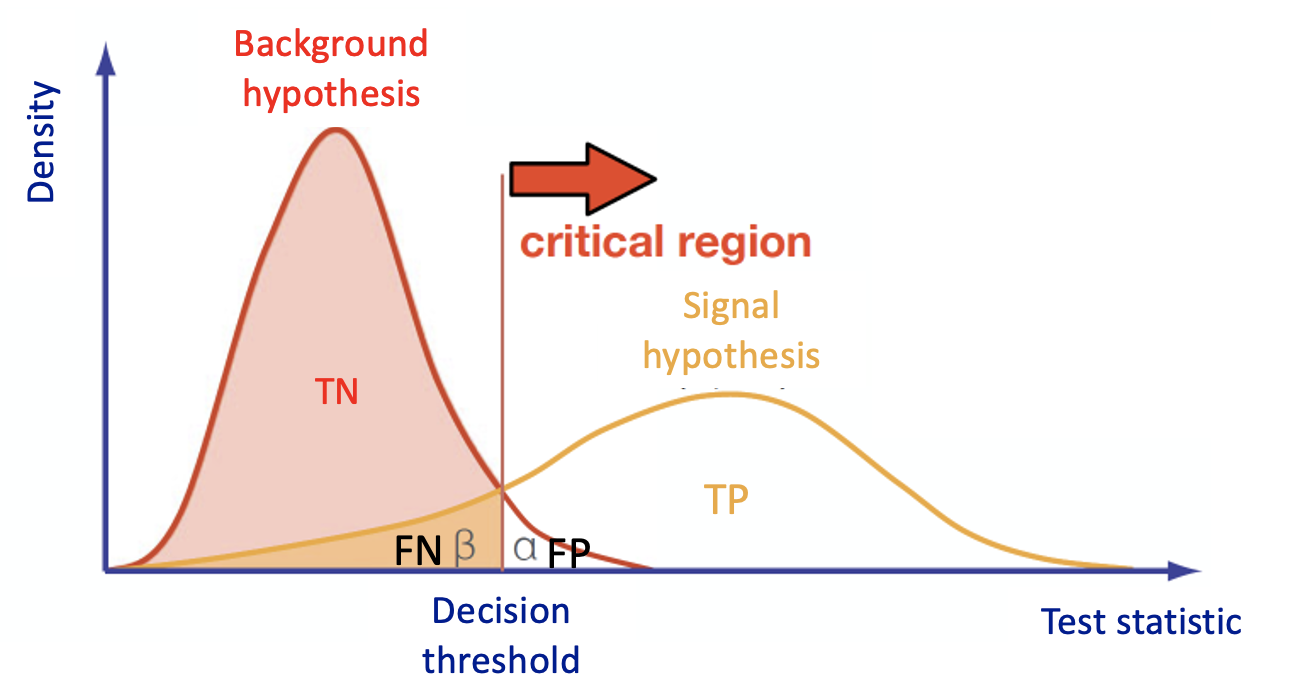

Multivariate Analysis algorithms receive a set of discriminating variables as input. No single variable on its own provides optimal discrimination between the two categories (we focus on a binary task in this exercise). The algorithms therefore compute an output that combines the input variables.

This is what every Multivariate Analysis (MVA) discriminator does. The output, also called the discriminant, score, or classifier output, is used as a test statistic to perform the signal selection: in a hypothesis test, it is the variable on which we decide where to cut.
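As a minimal sketch of this idea, the example below builds a Fisher linear discriminant with NumPy and cuts on its output. The two correlated Gaussian features, their means, and the helper `auc` are assumptions made only for illustration; they are not the variables or the algorithms used in the exercise.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
n_events = 5000

# Toy data (assumption): two strongly correlated Gaussian features per event.
covariance = [[1.0, 0.8], [0.8, 1.0]]
signal = rng.multivariate_normal([0.5, -0.5], covariance, size=n_events)
background = rng.multivariate_normal([-0.5, 0.5], covariance, size=n_events)


def auc(sig_scores, bkg_scores):
    """Area under the ROC curve, computed from ranks: the probability that a
    random signal event gets a higher score than a random background event."""
    scores = np.concatenate([sig_scores, bkg_scores])
    ranks = scores.argsort().argsort() + 1  # ranks 1..N (continuous data, no ties)
    n_s, n_b = len(sig_scores), len(bkg_scores)
    return (ranks[:n_s].sum() - n_s * (n_s + 1) / 2) / (n_s * n_b)


# Discrimination power of the first variable taken alone.
auc_x1 = auc(signal[:, 0], background[:, 0])

# Fisher linear discriminant: weights = (C_sig + C_bkg)^-1 (mean_sig - mean_bkg).
mean_diff = signal.mean(axis=0) - background.mean(axis=0)
within_cov = np.cov(signal, rowvar=False) + np.cov(background, rowvar=False)
weights = np.linalg.solve(within_cov, mean_diff)

# The discriminant output (score) is a single number per event.
score_sig = signal @ weights
score_bkg = background @ weights
auc_combined = auc(score_sig, score_bkg)

# Signal selection: cut on the score halfway between the two mean scores.
threshold = 0.5 * (score_sig.mean() + score_bkg.mean())
signal_efficiency = (score_sig > threshold).mean()
background_efficiency = (score_bkg > threshold).mean()

print(f"AUC using x1 alone:        {auc_x1:.3f}")
print(f"AUC using the combination: {auc_combined:.3f}")
print(f"Signal efficiency:         {signal_efficiency:.3f}")
print(f"Background efficiency:     {background_efficiency:.3f}")
```

In this toy setup the combined score separates the two classes much better than either variable alone, because the discriminant exploits the correlation between the features.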

In particular, Machine Learning tools are models with enough capacity to define their own internal representation of the data in order to accomplish a task: they learn from data and make predictions without being explicitly programmed to do so.

In the case of binary classification, the algorithm is first trained with two datasets:

- one that contains events distributed according to the null hypothesis H0 (in our case the signal; note that actual physics analyses may use a different convention);

- another that contains events distributed according to the alternative hypothesis H1 (in our case the background).

It must then learn how to classify new datasets (the test dataset in our case).

This means that the same set of features (random variables) is available under both hypotheses, each feature having its own distribution under H0 and under H1.
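A labelled dataset of this kind could be assembled as in the sketch below. The feature names, the event counts, and the Gaussian distributions are hypothetical placeholders, not the content of the exercise datasets.

```python
import numpy as np

rng = np.random.default_rng(seed=1)
feature_names = ["feature_1", "feature_2", "feature_3"]  # hypothetical names

# Toy events (assumption): same features, different distributions per hypothesis.
n_signal, n_background = 1000, 3000
x_signal = rng.normal(loc=1.0, scale=1.0, size=(n_signal, len(feature_names)))
x_background = rng.normal(loc=0.0, scale=1.0, size=(n_background, len(feature_names)))

# One feature matrix plus a label per event: 1 = signal (H0), 0 = background (H1).
features = np.vstack([x_signal, x_background])
labels = np.concatenate([
    np.ones(n_signal, dtype=int),
    np.zeros(n_background, dtype=int),
])

# Shuffle so that signal and background events are mixed.
order = rng.permutation(len(labels))
features, labels = features[order], labels[order]

# Each feature has its own distribution under H0 and under H1.
for i, name in enumerate(feature_names):
    mean_h0 = features[labels == 1, i].mean()
    mean_h1 = features[labels == 0, i].mean()
    print(f"{name}: mean under H0 = {mean_h0:.2f}, mean under H1 = {mean_h1:.2f}")
```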

To obtain a good ML classifier with high discriminating power, we will go through the following steps:

- Training (learning): a discriminator is built using all the input variables. Its parameters are then iteratively adjusted by comparing the discriminant output with the true label of each event (this is supervised machine learning; we will use two such algorithms). This phase is crucial: both the input variables and the parameters of the algorithm should be tuned!

- Alternatively, algorithms that group the data and find patterns according to the observed distribution of the input data, without using labels, are called unsupervised learning algorithms.

- A good habit is to train multiple models with various hyperparameters on a “reduced” training set (i.e. the full training set minus the so-called validation set), and then to select the model that performs best on the validation set. If you can use more than one validation set, you can perform a so-called cross-validation check (we will do it for the RF algorithm).

- Once the validation process is over, you re-train the best model on the full training set (including the validation set), and this gives you the final model.

- Test: once the training has been performed, the discriminator score is computed on a separate, independent dataset for both H0 and H1.

- An overfitting check is performed by comparing the classifier output on the test and training datasets, and the corresponding performances are computed (e.g. in terms of ROC curves).

- If the check fails, i.e. the performances on the test and training datasets differ significantly, this is a symptom of overtraining and the model is not good!
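The steps above can be sketched with scikit-learn as follows. This is only an illustration under stated assumptions: the toy Gaussian data, the candidate `max_depth` values, the 70/30 split, and the use of the ROC AUC as the figure of merit are choices made here, not necessarily those of the exercise notebook.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import cross_val_score, train_test_split

rng = np.random.default_rng(seed=2)
n_per_class, n_features = 1500, 4

# Toy data (assumption): signal shifted by one unit in the first three features.
shift = np.array([1.0, 1.0, 1.0, 0.0])
x = np.vstack([
    rng.normal(size=(n_per_class, n_features)) + shift,  # signal, label 1
    rng.normal(size=(n_per_class, n_features)),          # background, label 0
])
y = np.concatenate([np.ones(n_per_class, dtype=int), np.zeros(n_per_class, dtype=int)])

# Keep an independent test set that is never used for training or validation.
x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.3, random_state=0, stratify=y
)

# Validation: 5-fold cross-validation on the training set for each candidate depth.
candidate_depths = [2, 5, 10]
cv_auc = {}
for depth in candidate_depths:
    model = RandomForestClassifier(n_estimators=100, max_depth=depth, random_state=0)
    scores = cross_val_score(model, x_train, y_train, cv=5, scoring="roc_auc")
    cv_auc[depth] = scores.mean()
best_depth = max(cv_auc, key=cv_auc.get)

# Re-train the best configuration on the full training set: this is the final model.
final_model = RandomForestClassifier(
    n_estimators=100, max_depth=best_depth, random_state=0
)
final_model.fit(x_train, y_train)

# Test and overtraining check: compare the ROC AUC on the training and test sets.
auc_train = roc_auc_score(y_train, final_model.predict_proba(x_train)[:, 1])
auc_test = roc_auc_score(y_test, final_model.predict_proba(x_test)[:, 1])

print(f"Cross-validated AUC per depth: {cv_auc}")
print(f"Selected max_depth: {best_depth}")
print(f"AUC on training set: {auc_train:.3f}")
print(f"AUC on test set:     {auc_test:.3f}")
```

A training AUC far above the test AUC would point to overtraining; in that case a simpler model (for example a smaller `max_depth`) or more training data is worth trying.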

Particle Physics basic concepts: the Standard Model and the Higgs boson

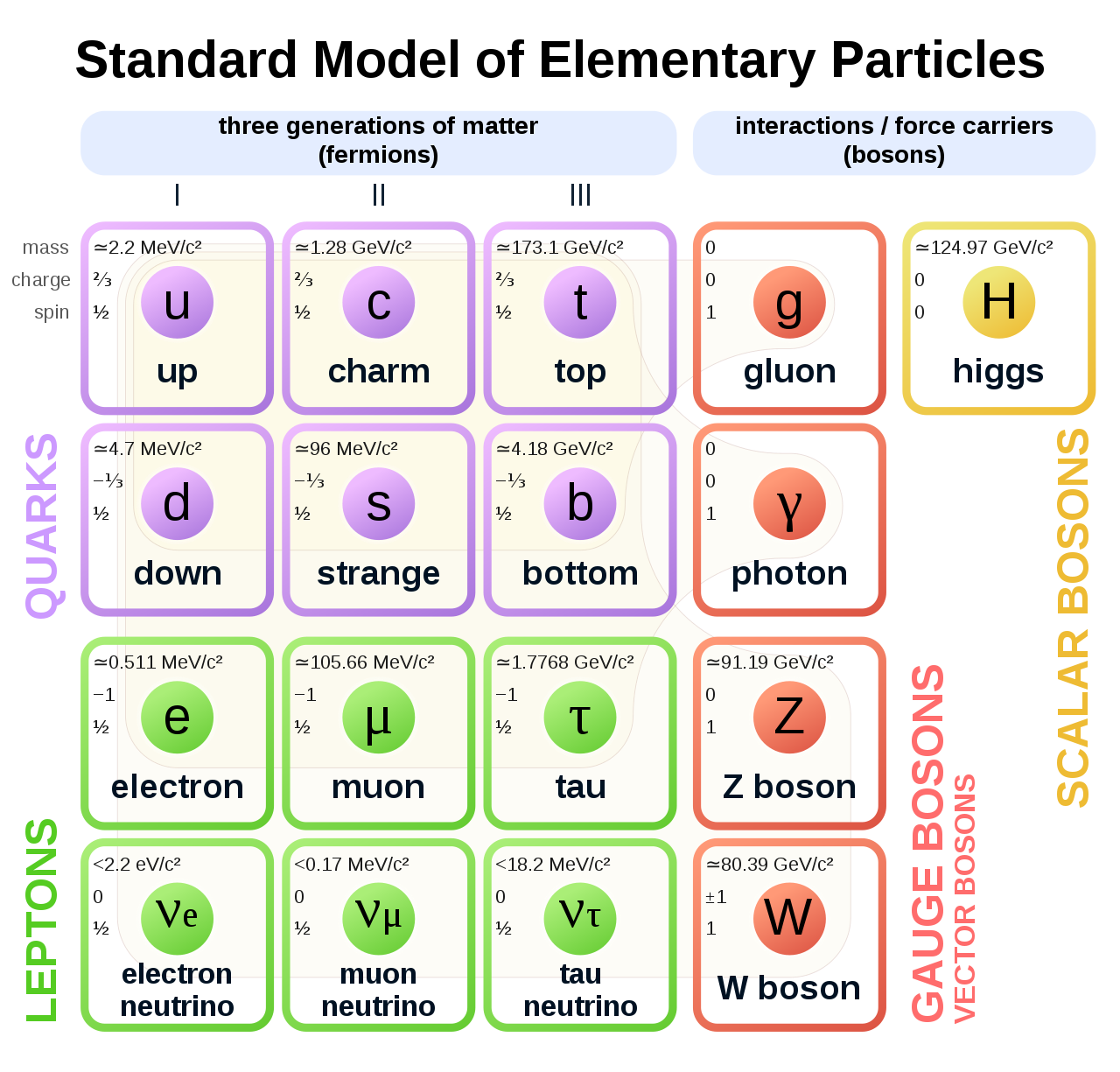

The Standard Model of elementary particles represents our knowledge of the microscopic world. It describes the matter constituents (quarks and leptons) and their interactions (mediated by bosons), which are the electromagnetic, the weak, and the strong interactions.

Among all these particles, the Higgs boson is a very peculiar case. It is the second heaviest known elementary particle (mass of about 125 GeV) after the top quark (about 173 GeV).

The ideal tool for measuring the Higgs boson properties is a particle collider. The Large Hadron Collider (LHC), located near Geneva on the border between France and Switzerland, is the largest proton-proton collider ever built. It consists of a ring 27 km in circumference, in which proton beams travelling at 99.999999% of the speed of light collide at a center-of-mass energy of 13 TeV. At the LHC, 40 million collisions occur every second, providing an enormous amount of data. Thanks to these data, the ATLAS and CMS experiments discovered the missing piece of the Standard Model, the Higgs boson, in 2012.

During a collision, the energy is so high that the protons are "broken" into their fundamental components, i.e. quarks and gluons, which can interact with each other and produce particles that we do not observe in everyday life, such as the Higgs boson. The production of a Higgs boson via the vector boson fusion (VBF) mechanism is, however, a relatively "rare" phenomenon: other physical processes occur far more often, such as those initiated by the strong interaction, which produce the Higgs boson through the so-called gluon-gluon fusion (ggH) production process. In High Energy Physics, the rate of a physics process is expressed through its cross-section. We say that Higgs boson production via vector boson fusion has a smaller cross-section than production of the same boson (a scalar particle) via the ggH mechanism.

The experimental consequence is that distinguishing the two processes, which are characterized by their decay products, can be extremely difficult, given that the latter process is far more likely to occur. In the exercise, we will propose merging different backgrounds, which must then be distinguished from the signal events.
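To give a feeling for what a smaller cross-section means in practice, the expected number of produced events is N = σ × L, where σ is the cross-section and L the integrated luminosity. The sketch below uses rounded cross-section values at 13 TeV that are assumed here for illustration (the LHC Higgs Working Group tables give the reference numbers), and a hypothetical integrated luminosity of 100 fb⁻¹. It counts produced Higgs bosons only, before any decay branching ratio or detector effect.

```python
# Rounded production cross-sections at 13 TeV for a 125 GeV Higgs boson, in pb.
# Assumed values for illustration only; check the official tables before use.
SIGMA_GGH_PB = 48.6
SIGMA_VBF_PB = 3.78
FB_PER_PB = 1000.0  # 1 pb = 1000 fb


def expected_events(sigma_pb, luminosity_fb_inv):
    """Expected number of produced events, N = sigma * L."""
    return sigma_pb * FB_PER_PB * luminosity_fb_inv


luminosity_fb_inv = 100.0  # hypothetical integrated luminosity in fb^-1

n_ggh = expected_events(SIGMA_GGH_PB, luminosity_fb_inv)
n_vbf = expected_events(SIGMA_VBF_PB, luminosity_fb_inv)
ratio = n_ggh / n_vbf

print(f"Produced ggH events: {n_ggh:.0f}")
print(f"Produced VBF events: {n_vbf:.0f}")
print(f"ggH / VBF ratio:     {ratio:.1f}")
```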

Experimental signature of the Higgs boson in a particle detector

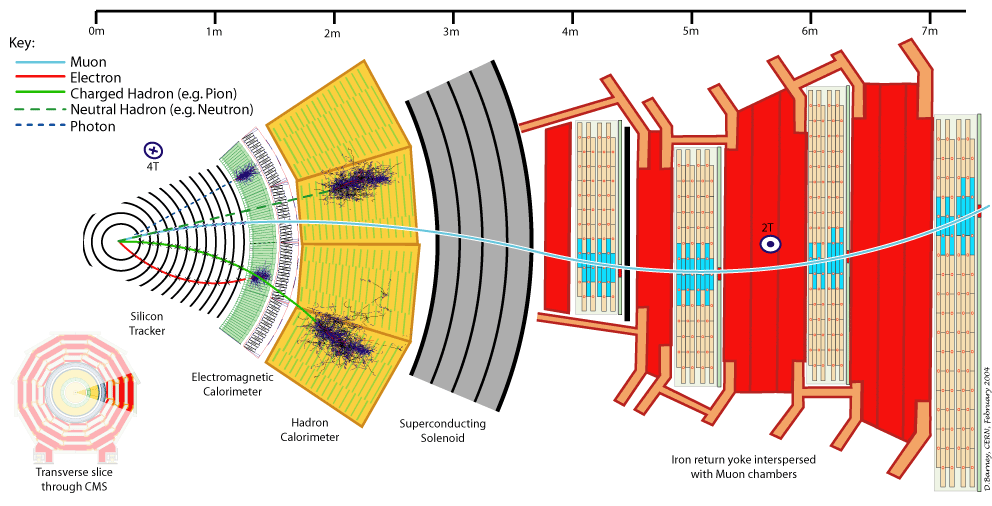

Let's first understand what the experimental signatures are and how the LHC detectors work. As an example, this is a sketch of the Compact Muon Solenoid (CMS) detector.

A collider detector is organized in layers: each layer is able to distinguish and measure different particles and their properties. For example, the silicon tracker detects every charged particle. The electromagnetic calorimeter detects photons and electrons. The hadronic calorimeter detects hadrons (such as protons and neutrons). The muon chambers detect muons (which have a long lifetime and pass through the inner layers).

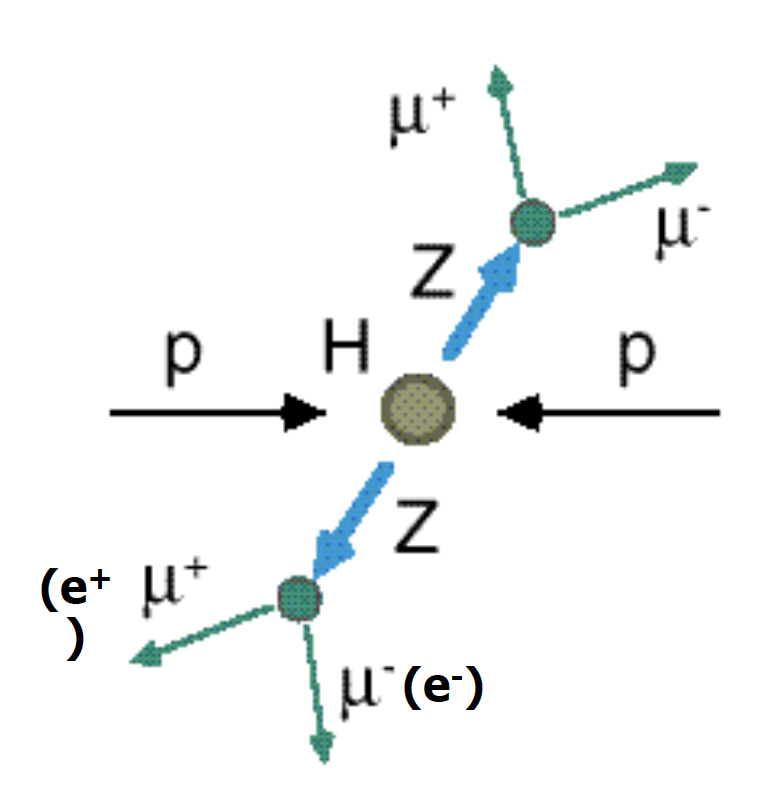

Our physics problem consists of detecting the so-called golden channel H→ZZ*→l+ l- l'+ l'-, which is one of the possible decays of the Higgs boson: its name is due to the fact that it has the clearest and cleanest signature of all the possible Higgs boson decay modes. The decay chain is sketched here: the Higgs boson decays into a pair of Z bosons, each of which in turn decays into a lepton pair (in the picture, muon-antimuon or electron-positron pairs). In this exercise, we will use only the datasets for the 4mu decay channel; the datasets for the 4e channel are provided so that you can analyze them as an optional exercise. At the LHC experiments, the 2e2mu decay channel is also widely analyzed.
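A typical quantity to plot for this channel is the invariant mass of the four leptons, which peaks near the Higgs boson mass for signal events. The sketch below computes it from the transverse momentum, pseudorapidity, and azimuthal angle of each lepton. The function names and the toy event are hypothetical, and the actual variable names in the exercise datasets may differ.

```python
import math

MUON_MASS_GEV = 0.105658  # muon mass in GeV


def four_vector(pt, eta, phi, mass=MUON_MASS_GEV):
    """Return (E, px, py, pz) in GeV from transverse momentum, pseudorapidity,
    and azimuthal angle."""
    px = pt * math.cos(phi)
    py = pt * math.sin(phi)
    pz = pt * math.sinh(eta)
    energy = math.sqrt(px * px + py * py + pz * pz + mass * mass)
    return energy, px, py, pz


def invariant_mass(leptons, mass=MUON_MASS_GEV):
    """Invariant mass in GeV of a list of leptons given as (pt, eta, phi)."""
    e_tot = px_tot = py_tot = pz_tot = 0.0
    for pt, eta, phi in leptons:
        energy, px, py, pz = four_vector(pt, eta, phi, mass)
        e_tot += energy
        px_tot += px
        py_tot += py
        pz_tot += pz
    m_squared = e_tot**2 - (px_tot**2 + py_tot**2 + pz_tot**2)
    return math.sqrt(max(m_squared, 0.0))  # guard against rounding below zero


# Hypothetical four-muon event: (pt [GeV], eta, phi) for each muon.
toy_event = [(45.0, 0.3, 0.1), (30.0, -0.8, 2.9), (25.0, 1.2, -1.7), (12.0, -0.2, -2.6)]
m4l = invariant_mass(toy_event)
print(f"Four-lepton invariant mass of the toy event: {m4l:.1f} GeV")
```

The same function applied to each lepton pair gives the masses of the two Z boson candidates.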

How to execute it

Use Google Colab

...