If your use case involves parallel computing (e.g. multi-node MPI) or requires special computing resources such as Intel Manycore or NVIDIA GPUs, you probably need to access the CNAF HPC cluster, a small cluster (about 100 TFLOPS) with special hardware available [9]. Access to the HPC resources requires a separate account in the HPC group.

This cluster is accessible via the LSF batch system, for which you can find the instructions here [10], and via the Slurm Workload Manager, which is introduced below. Please keep in mind that LSF is currently in its end-of-life (EOL) phase and will be fully replaced by Slurm in the near future.

In order to access the HPC cluster, you will need a separate account belonging to the HPC group. To obtain it, please follow the Account Request steps below:

- Account Request

- Download and fill in the access authorization form. If help is needed, please contact us at hpc-support@lists.cnaf.infn.it

- If you don't have an INFN association, please attach a scan of an identity document (for example, a passport or ID card)

- Send the form via mail to sysop@cnaf.infn.it and to User Support (user-support@lists.cnaf.infn.it).

...

- Once the account creation is completed, you will receive a confirmation at your email address, together with the credentials you need to access the cluster.

- Access

SSH into bastion.cnaf.infn.it with your credentials.

From bastion, access the cluster by logging into ui-hpc.cr.cnaf.infn.it. Use the same credentials you used to log into bastion.
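The two login steps above can also be combined into a single command. The sketch below only builds and prints that command; it assumes that OpenSSH's ProxyJump option (`-J`) is permitted on bastion, which is not stated in this guide, and `jdoe` is a hypothetical username.

```shell
# Build the one-step login command that hops through bastion using
# OpenSSH's ProxyJump option (-J, available since OpenSSH 7.3).
# Assumption: ProxyJump is allowed on bastion; if it is not, use the
# two separate ssh steps described in the text.
hpc_login_command() {
    user="$1"   # your CNAF username (the same on both hosts)
    printf 'ssh -J %s@bastion.cnaf.infn.it %s@ui-hpc.cr.cnaf.infn.it\n' "$user" "$user"
}

# "jdoe" is a hypothetical username used only for illustration.
hpc_login_command "jdoe"
```

Copy the printed command into your terminal after replacing the username with your own.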

Your home directory will be: /home/HPC/<your_username>. The home folder is shared among all the cluster nodes.

No quotas are currently enforced on the home directories, and only about 4TB are available in the /home partition. If you need more disk space for data and checkpointing, every user can access the following directory:

/storage/gpfs_maestro/hpc/user/<your_username>/

which is on shared GPFS storage. Please do not leave huge unused files in either the home directories or the GPFS storage areas. Quotas will be enforced in the near future.
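To help keep these areas tidy, a minimal sketch for spotting large, old files is given here. The thresholds (1 GiB, 90 days) and the helper name `list_stale_files` are arbitrary choices for illustration, not site policy, and the `-size +1G` syntax assumes GNU find.

```shell
# List files larger than 1 GiB that have not been modified for more
# than 90 days under the given directory. Both thresholds are
# arbitrary choices for this sketch.
list_stale_files() {
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "directory not found: $dir" >&2
        return 1
    fi
    # Errors from unreadable subdirectories are discarded.
    find "$dir" -type f -size +1G -mtime +90 2>/dev/null || true
}

# Check the home directory and, if it exists, the GPFS user area.
if [ -d "${HOME:-}" ]; then
    list_stale_files "$HOME"
fi
gpfs_dir="/storage/gpfs_maestro/hpc/user/$(id -un)"
if [ -d "$gpfs_dir" ]; then
    list_stale_files "$gpfs_dir"
fi
```

The function only lists candidates; deciding what to delete or archive is left to the user.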

...

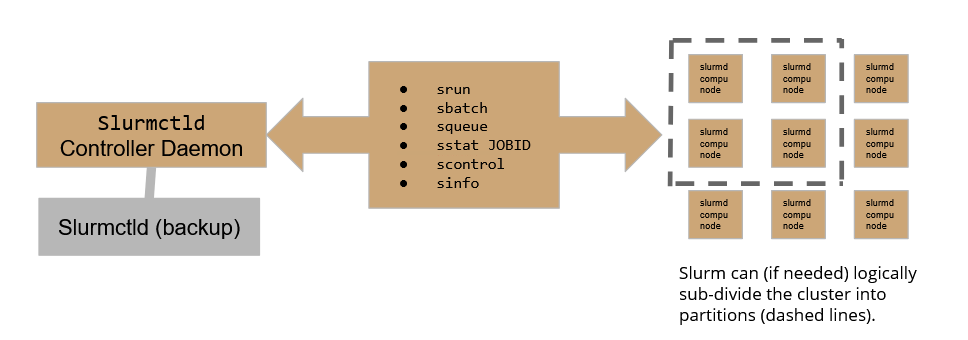

- SLURM architecture

On our HPC cluster, the user can leverage two queues:

<slurmprova> should be used for CPU-exclusive jobs (i.e. jobs that do not use GPU)

there are 4 partitions:

- slurmHPC_int: MaxTime for computation = 2h

- slurmHPC_inf: MaxTime for computation = 79h

- slurmHPC_short: MaxTime for computation = 79h

- slurmHPC_gpu: MaxTime for computation = 33h

If not requested differently at submit time, jobs will be submitted to the _int partition.

<slurmprovaGPU> should be used for CPU+GPU jobs.

- Check the cluster status with SLURM

you can check the cluster status in two ways: using the sinfo -N

...

command which will print a summary table on the standard output.

The table shows 4 columns: NODELIST, NODES, PARTITION and STATE.

1) NODELIST shows node names. Multiple occurrences are allowed since a node can belong to more than one partition

2) NODES indicates the number of machines available

3) PARTITION, which in Slurm is a synonym of "queue", indicates the partition to which the node belongs. If a partition name ends with an asterisk, that partition is the default one used to run the job when no other is specified.

4) STATE indicates if the node is not running jobs ("idle"), if it is in drain state ("drain") or if it is running some jobs ("allocated").
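Since a node appears once per partition it belongs to, counting rows in the table can overestimate the number of free machines. The sketch below counts distinct nodes in a given state from the four-column layout described above; the helper name `count_nodes_in_state` is hypothetical, and the state is matched exactly as printed in the STATE column.

```shell
# Count distinct nodes in a given state from `sinfo -N` output read on
# stdin. A node listed once per partition is counted only once.
count_nodes_in_state() {
    awk -v want="$1" '
        NR > 1 && $4 == want { seen[$1] = 1 }   # skip header; column 4 is STATE
        END { n = 0; for (node in seen) n++; print n }
    '
}

# Query the live cluster only when sinfo is available on this host.
if command -v sinfo >/dev/null 2>&1; then
    sinfo -N | count_nodes_in_state idle
fi
```

Passing a different state string as the argument counts nodes in that state instead.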

...

- Retrieve job information

You can retrieve information regarding active job queues with the squeue command, which gives a synthetic overview of the Slurm job queue status, or alternatively you can use the scontrol show jobs command for a detailed view of jobs (both running and completed).

Among the information printed by the squeue command, the user can find the job id as well as the running time and status.

If you are running a job, you can check it in detail with sstat -j <jobid>, where jobid is the id given to you by Slurm once you submit the job.
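The three commands can be wrapped in a small helper to inspect a single job. This is only a sketch: the function name `job_report` is hypothetical, the numeric check is a simplification (it rejects array or step ids such as 123_4 or 123.0), and the sstat fields shown are just one possible selection.

```shell
# Print the queue entry, the detailed description and the live
# statistics of one job. Intended to be run on the cluster UI.
job_report() {
    jobid="$1"
    case "$jobid" in
        ''|*[!0-9]*)
            echo "usage: job_report <numeric jobid>" >&2
            return 2
            ;;
    esac
    squeue -j "$jobid"                               # synthetic overview
    scontrol show job "$jobid"                       # detailed view
    sstat -j "$jobid" --format=JobID,MaxRSS,AveCPU   # running job only
}

# Example (run on the cluster, with an id returned by sbatch):
#   job_report 12345
```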

- Submit basic instructions on Slurm

...

If the user needs to specify the run conditions in detail or to run more complex jobs, it is strongly recommended (if not mandatory) to write a batch submit file (see below).

In the following, the structure of batch jobs is discussed along with a few basic examples.

- The structure of a basic batch job

...

A Slurm batch job can be configured quite extensively, so shedding some light on the most common sbatch options may help us configure jobs properly.

#SBATCH --partition=<NAME>

This instruction allows the user to specify on which queue (partition in Slurm jargon) the batch job must land. The option is case-sensitive.

...

This instruction assigns a “human-friendly” name to the job, making it easier for the user to recognize among other jobs.

#SBATCH --output=<FILE>

This instruction redirects any output to a file (dynamically created at run-time).

#SBATCH --nodelist=<NODES>

...

N.B. Slurm chooses the best <INT> nodes by evaluating the current payload, so the choice is not entirely random. If we want specific nodes to be used, the correct option is the aforementioned --nodelist.

...

the output should be something like (hostnames and formatting may change):

hpc-200-06-17 hpc-200-06-17 hpc-200-06-17 hpc-200-06-17 hpc-200-06-17 hpc-200-06-17 hpc-200-06-17 hpc-200-06-17

hpc-200-06-18 hpc-200-06-18 hpc-200-06-18 hpc-200-06-18 hpc-200-06-18 hpc-200-06-18 hpc-200-06-18 hpc-200-06-18

As we can see, --ntasks-per-node=8 was interpreted by Slurm as “reserve 8 CPUs on node 17 and 8 on node 18 and execute the job on those CPUs”.

It is useful to see how the output would look if --ntasks=8 were used instead of --ntasks-per-node=8. In that case, the output should be something like:

hpc-200-06-17 hpc-200-06-18 hpc-200-06-17 hpc-200-06-18 hpc-200-06-17 hpc-200-06-18 hpc-200-06-17 hpc-200-06-18

As we can see, the execution involved only 8 CPUs, and the payload was distributed to minimize the burden on the nodes.
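A comparison of this kind can be tried with a batch file along the following lines. This is a minimal sketch, not necessarily the script that produced the output above: the file name `hello_hpc.sh`, the job name and the output file pattern are hypothetical, and the partition is simply the default one mentioned earlier. The snippet writes the batch file and submits it only if sbatch is available on the current host.

```shell
# Write a small batch file that prints the hostname once per task.
cat > hello_hpc.sh <<'EOF'
#!/bin/bash
#SBATCH --partition=slurmHPC_int
#SBATCH --job-name=hello_hpc
#SBATCH --output=hello_hpc_%j.out
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=8
# One task per reserved CPU; each task prints the node it runs on.
srun hostname
EOF

# Submit only where Slurm is installed (e.g. on the cluster UI).
if command -v sbatch >/dev/null 2>&1; then
    sbatch hello_hpc.sh
else
    echo "sbatch not found; batch file written to hello_hpc.sh"
fi
```

In the output file name, `%j` is replaced by Slurm with the job id. Replacing the `--ntasks-per-node=8` line with `--ntasks=8` corresponds to the second case discussed above.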

...

Here the --bcast option copies the executable to every node, with the destination path given as its argument. The final part of the srun command (the actual instruction) should then be consistent with the path specified with --bcast. In this case, we decided to copy the executable into the home folder, keeping the original name as-is.

- Additional Information

- complete overview of hardware specs per node: http://wiki.infn.it/strutture/cnaf/clusterhpc/home

...