Decommissioning of the HPC cluster

As of January 15th, 2025, the historical CNAF HPC cluster has been decommissioned. This section of the guide is kept temporarily for reference and will be heavily revised once the HPC bubbles are installed and made available to users of projects that have formally requested their use from ICSC via the PICA portal.

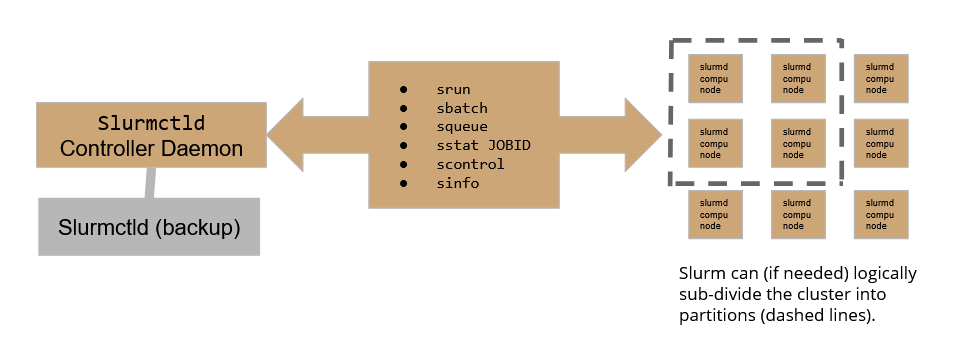

Slurm workload manager relies on the following scheme:

Where the Slurmctld daemon plays the role of the controller, allowing the user to submit and follow the execution of a job, while Slurmd daemons are the active part in the execution of jobs over the cluster. To assure high availability, A backup controller daemon has been configured to assure the continuity of service.
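For reference, the state of the primary and backup controllers can be queried from a login node with `scontrol ping`, which reports whether each slurmctld daemon is responding. The minimal sketch below simply wraps that call; the function name `check_controllers` is hypothetical, and the exact wording of the output depends on the installed Slurm version.

```shell
#!/bin/sh
# Query the primary and backup slurmctld daemons with `scontrol ping`.
# Needs the Slurm client tools, i.e. it must run on a cluster login node.
check_controllers() {
  if ! command -v scontrol >/dev/null 2>&1; then
    echo "scontrol not found: run this on a Slurm login node" >&2
    return 1
  fi
  scontrol ping
}

check_controllers
```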

On our HPC cluster, there are currently 5 active partitions, each with its own maximum computation time (MaxTime):

- slurmHPC_int: MaxTime = 79 h

- slurmHPC_inf: MaxTime = 79 h

- slurmHPC_short: MaxTime = 79 h

- slurm_GPU: MaxTime = 33 h

- slurm_hpc_gpuV100: MaxTime = 33 h

Please be aware that a job exceeding the MaxTime enforced on its partition will be held.
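The limits above can be checked before submitting a job. The following is a sketch of a hypothetical helper, not a tool provided on the cluster: `partition_max_hours` encodes the MaxTime values listed above, and `walltime_fits` tells whether a requested walltime in HH:MM:SS format fits within them.

```shell
#!/bin/sh
# Hypothetical helper, not part of the cluster software: it only encodes
# the MaxTime values listed above and compares them with a requested walltime.

# Print the MaxTime of a partition in hours; fail for unknown partitions.
partition_max_hours() {
  case "$1" in
    slurmHPC_int|slurmHPC_inf|slurmHPC_short) echo 79 ;;
    slurm_GPU|slurm_hpc_gpuV100) echo 33 ;;
    *) return 1 ;;
  esac
}

# Succeed (status 0) if an HH:MM:SS walltime fits within the partition MaxTime,
# fail with 1 if it does not, and with 2 for an unknown partition.
walltime_fits() {
  wf_max_h=$(partition_max_hours "$1") || return 2
  # awk converts HH:MM:SS to seconds, so values such as "08" are read as decimal.
  wf_req_s=$(printf '%s\n' "$2" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }')
  [ "$wf_req_s" -le $((wf_max_h * 3600)) ]
}

# Example: 40 h fits in slurmHPC_short (79 h) but not in slurm_GPU (33 h).
for p in slurmHPC_short slurm_GPU; do
  if walltime_fits "$p" 40:00:00; then
    echo "$p: 40:00:00 accepted"
  else
    echo "$p: 40:00:00 exceeds MaxTime"
  fi
done
```

Keeping the limits in a single `case` statement makes the helper easy to update if the partition limits change.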

Unless requested otherwise at submit time, jobs are submitted to the slurmHPC_int partition. Users are free to choose which partition to use by setting it properly in the batch submission file (see below).
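As a sketch of how the partition is selected, the batch file below asks for the slurmHPC_short partition instead of the default one. The job name, resources, walltime and output file name are illustrative assumptions; sbatch reads the `#SBATCH` lines at submit time, while bash treats them as ordinary comments.

```shell
#!/bin/bash
# Illustrative batch file: job name, resources and walltime are assumptions.
# sbatch reads the #SBATCH lines at submit time; bash treats them as comments.
#SBATCH --job-name=my_job
# Run on slurmHPC_short instead of the default slurmHPC_int partition.
#SBATCH --partition=slurmHPC_short
# Requested walltime (HH:MM:SS); it must stay within the partition MaxTime.
#SBATCH --time=02:00:00
#SBATCH --nodes=1
#SBATCH --ntasks=1
# %j is replaced by the job ID in the output file name.
#SBATCH --output=my_job_%j.out

echo "Job running on $(hostname)"
```

The file (here hypothetically named `my_job.sh`) would be submitted with `sbatch my_job.sh`. Options given on the sbatch command line, e.g. `sbatch --partition=slurmHPC_inf my_job.sh`, take precedence over the `#SBATCH` directives in the file.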

Check the cluster status with SLURM

You can check the cluster status with the sinfo -N command, which prints a node-oriented summary table on standard output.

The table shows 4 columns: NODELIST, NODES, PARTITION and STATE.

- NODELIST shows the node names. The same node may appear several times, since a node can belong to more than one partition.

- NODES indicates the number of nodes described by the row; with sinfo -N each row describes a single node, so this value is 1.

- PARTITION (in Slurm, "partition" is a synonym of "queue") indicates the partition the node belongs to. A partition name ending with an asterisk marks the default partition, which is used when no partition is specified at submit time.

- STATE indicates whether the node is not running jobs ("idle"), is in drain state ("drain"), or is running jobs ("allocated").

For instance:

```
-bash-4.2$ sinfo -N
NODELIST        NODES  PARTITION       STATE
hpc-200-06-05   1      slurmHPC_int*   idle
hpc-200-06-05   1      slurmHPC_short  idle
hpc-200-06-05   1      slurmHPC_inf    idle
hpc-200-06-05   1      slurm_GPU       idle
hpc-200-06-06   1      slurmHPC_short  idle
hpc-200-06-06   1      slurmHPC_int*   idle
hpc-200-06-06   1      slurmHPC_inf    idle
[...]
```
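When the table is long, the output of sinfo -N can be filtered with standard tools. The awk-based functions below are a hypothetical sketch that assumes the default four-column layout with a header line, as in the example above: `default_partition` prints the partition marked with an asterisk, `node_partitions` lists the partitions a node belongs to, and `idle_nodes` lists the idle nodes of a partition.

```shell
#!/bin/sh
# Hypothetical helpers that filter the default `sinfo -N` output
# (header line + NODELIST NODES PARTITION STATE columns).
# The trailing "*" that marks the default partition is stripped before comparing.

# Print the default partition, i.e. the one whose name ends with "*".
default_partition() {
  awk 'NR > 1 && $3 ~ /\*$/ { sub(/\*$/, "", $3); print $3; exit }'
}

# Print the partitions a given node belongs to, one per line.
node_partitions() {
  awk -v node="$1" 'NR > 1 && $1 == node { p = $3; sub(/\*$/, "", p); print p }'
}

# Print the nodes of a given partition whose state is "idle", one per line.
idle_nodes() {
  awk -v part="$1" 'NR > 1 { p = $3; sub(/\*$/, "", p); if (p == part && $4 == "idle") print $1 }'
}

# Usage on a login node (requires the Slurm client tools):
#   sinfo -N | default_partition
#   sinfo -N | node_partitions hpc-200-06-05
#   sinfo -N | idle_nodes slurmHPC_short
```

If the header is suppressed with `sinfo -h`, or the columns are changed with `-o`, the `NR > 1` check and the field numbers have to be adjusted. For simple cases sinfo can also filter by itself, e.g. `sinfo -N -p slurmHPC_short -t idle`.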